Audience

- Sentiment: cautious optimism

- Political Group: moderate

- Age Group: 30-50

- Gender: both

Overview

- AI technologies are being increasingly used for medical diagnostics, raising questions about their reliability.

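- A December 2024 study found that popular AI chatbots handled some parts of a standard cognitive test well, such as naming and attention, but struggled with visuospatial and executive tasks that call for more human-like reasoning.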

- There is a need for better human-AI collaboration and ongoing improvements in AI cognitive functions for effective medical use.

The Cognitive Challenges of AI in Medical Diagnostics: A New Perspective

Introduction: The Rise of AI in Medicine

Artificial intelligence (AI) has become a buzzword in recent years, especially when it comes to its role in healthcare. Imagine being able to diagnose a medical condition just by feeding some data into a computer. Sounds futuristic, right? Well, it’s happening right now. AI technologies like large language models (LLMs) and the chatbots built on them are being used to analyze medical data quickly and efficiently, often surfacing insights that might take human doctors much longer to find. However, a recent study raises important questions about the reliability of these tools, suggesting they may be less dependable than their speed and polish imply.

Understanding the Study: What’s the Big Deal?

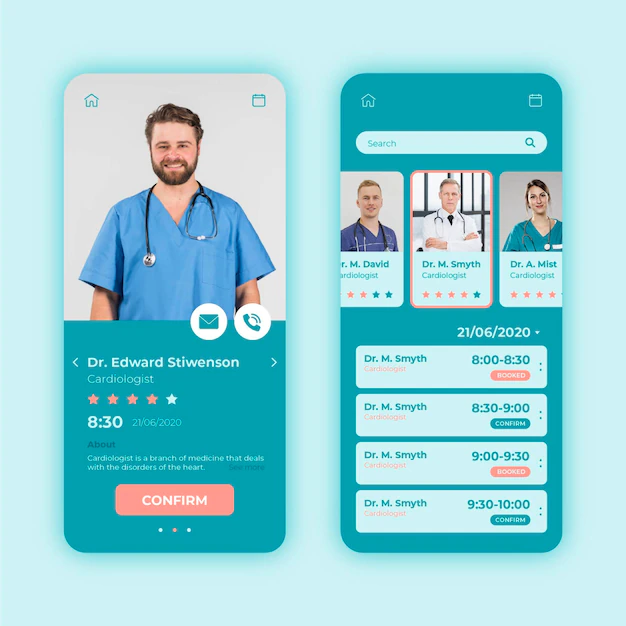

On December 20, 2024, researchers published a study that examined the cognitive abilities of several popular AI models, including OpenAI’s ChatGPT and Anthropic’s Claude Sonnet. They wanted to see how well these AI systems could handle tasks that reflect human cognition. For this, they used a test called the Montreal Cognitive Assessment (MoCA). You might be wondering, what’s that? The MoCA is a short test doctors use to screen people for cognitive impairment, the kind of decline that can come with age or with conditions such as dementia and Alzheimer’s disease.

The results were surprising. The AI models performed reasonably well on some parts of the test, such as naming objects and paying attention, but struggled significantly with visuospatial and executive tasks (on the MoCA, these include items like drawing a clock and connecting numbers and letters in sequence). This suggests that even though AI can recognize patterns and make decisions based on data, it may not “think” the way humans do. The study also found that older versions of the models tended to score lower than newer ones, an observation that invites a loose comparison with age-related cognitive decline in people.
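To make the scoring concrete, here is a minimal Python sketch of how a MoCA total is tallied. It assumes the standard 30-point form with its usual per-domain maxima, the conventional cutoff of 26 for the normal range, and the common one-point adjustment for 12 or fewer years of formal education. The function names and the example scores are hypothetical illustrations, not code or data from the study. The example shows how weak visuospatial/executive and recall items alone can pull a total below the cutoff even with full marks on naming and attention.

```python
# Hypothetical sketch of MoCA-style scoring; not the study's code or data.
# Maximum points per domain on the standard 30-point MoCA form.
MAX_POINTS = {
    "visuospatial_executive": 5,
    "naming": 3,
    "attention": 6,
    "language": 3,
    "abstraction": 2,
    "delayed_recall": 5,
    "orientation": 6,
}

NORMAL_CUTOFF = 26  # a total of 26 or more is conventionally read as normal


def moca_total(domain_scores: dict[str, int], years_of_education: int) -> int:
    """Validate and sum domain scores, then apply the education adjustment."""
    total = 0
    for domain, max_points in MAX_POINTS.items():
        score = domain_scores.get(domain, 0)
        if not 0 <= score <= max_points:
            raise ValueError(f"{domain} score {score} outside 0..{max_points}")
        total += score
    # One extra point for 12 or fewer years of formal education, capped at 30.
    if years_of_education <= 12:
        total += 1
    return min(total, sum(MAX_POINTS.values()))


def interpret(total: int) -> str:
    """Map a total score onto the conventional screening interpretation."""
    return "normal range" if total >= NORMAL_CUTOFF else "possible impairment"


# Hypothetical test-taker: full marks on naming and attention,
# but weak on visuospatial/executive items and delayed recall.
scores = {
    "visuospatial_executive": 1,
    "naming": 3,
    "attention": 6,
    "language": 3,
    "abstraction": 2,
    "delayed_recall": 3,
    "orientation": 6,
}
total = moca_total(scores, years_of_education=16)
print(total, interpret(total))  # 24 possible impairment
```

Note that the screening result depends on the total alone, which is why a test-taker who excels in some domains can still fall below the cutoff because of others.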

What Does This Mean for Healthcare?

You might be thinking, “Why should I care whether an AI passes a cognitive test?” The study’s implications are significant. It highlights a weak point in the reliability of AI for medical diagnostics. These systems can quickly process vast amounts of information and detect anomalies (like early signs of disease), but they may not be dependable when it comes to complex reasoning or nuanced situations, which are common in medical diagnosis.

Imagine being a doctor who relies on software to help diagnose a patient. If that software struggles with critical thinking tasks, how can you trust it to guide treatment choices? Say a patient arrives with a combination of symptoms that don’t quite fit a typical diagnosis. A seasoned doctor might pick up on subtle clues that point to a rare condition, something an AI might overlook entirely because of its limitations.

The Strengths and Weaknesses of AI Tools

Let’s break down the strengths and weaknesses of AI in the medical field in light of the study’s findings.

Strengths: Fast and Efficient

- Speed: AI can analyze thousands of medical records in a fraction of the time it would take a human. This is especially beneficial in emergency situations where time is critical.

- Pattern Recognition: AI is excellent at identifying patterns within large datasets. Think about X-rays or MRI scans—the AI can detect abnormalities that may be too subtle for the human eye.

- Availability: AI doesn’t get tired. It can work 24/7, which is helpful for hospitals that don’t have enough personnel.

Weaknesses: Cognitive Limitations

- Cognitive Decline: As mentioned, older versions of AI models scored lower on the cognitive test than newer ones, a loose parallel to the cognitive decline some people experience with age.

- Lack of Human Intuition: AI lacks the intuition that comes from years of experience interacting with patients. It might miss the emotional context surrounding a diagnosis, leading to cold and impersonal care.

- Errors in Complex Decisions: In unpredictable medical situations, a rigid AI system might make errors that an experienced doctor would catch through clinical knowledge or instinct.

The Future of AI in Medicine: What Needs to Change?

This study isn’t just a critique of current AI models; it also serves as a wake-up call. For AI to be truly effective in life-and-death situations, improvements must be made. Here are a few suggestions for how the future of AI in healthcare can be shaped:

- Enhancing Cognitive Flexibility: AI developers could focus on making systems more adaptable and capable of learning from new experiences, much like how humans learn over time.

- Human-AI Collaboration: Instead of relying entirely on AI systems for diagnoses, they should be used as tools that assist healthcare professionals. Think of it like a calculator—great for number crunching but not a replacement for the person who understands the overall math problem.

- Continuous Assessments: Just as humans undergo regular check-ups and cognitive assessments, AI systems could benefit from routine evaluations to ensure they’re functioning correctly and effectively.

- Educating Medical Professionals: Doctors and healthcare staff should be trained to use AI tools effectively, so that they understand the tools’ limitations and can make informed decisions.

Conclusion: The Road Ahead

The study raises essential questions about the role of AI in healthcare. While we are still in the early stages of AI in medicine, it’s important to approach these technological advancements with a blend of optimism and caution. AI has immense potential to change the way we diagnose and treat illnesses, but serious thought must go into how these tools are implemented and trusted.

As AI continues to develop, will we fully integrate it into healthcare, or will we choose to keep human judgment at the forefront? What do you think? Do you believe AI can ever replace human doctors, or should it serve as a tool to assist them? I’d love to hear your thoughts! Please share your comments below!